You probably already know that loading screens are usually static. But why? At that moment the game is loading or downloading all the assets it needs. That work falls on the CPU, causing temporary but noticeable frame-rate drops.

Because the CPU is the bottleneck at that moment, most games keep the loading screen as light as possible in terms of performance. But what about using the GPU to create something catchy that entertains the user while the game loads? The key is to use a shader. It does its work on the GPU and adds practically no extra load on the CPU.

Of course, even with a shader, don't expect your loading screen to run perfectly smoothly. Part of the rendering work, such as preparing and submitting draw calls, still belongs to the CPU. If the CPU is fully saturated, the GPU has to wait before it can refresh the screen, so the CPU can still become a blocker. The main reason to use a shader is to avoid adding more work to the CPU.
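To see why CPU work, not shader cost, sets the pace, here is a deliberately simplified model written in Python as an illustration. It assumes the CPU and GPU overlap perfectly in a pipeline, so a frame takes as long as the slower of the two. The 50 ms loading cost and the GPU timings are hypothetical numbers, not measurements.

```python
def frame_time_ms(cpu_ms: float, gpu_ms: float) -> float:
    """Approximate frame time when CPU and GPU work overlap in a pipeline."""
    # Simplifying assumption: the slower processor sets the pace.
    return max(cpu_ms, gpu_ms)


def fps(cpu_ms: float, gpu_ms: float) -> float:
    """Frames per second implied by frame_time_ms()."""
    return 1000.0 / frame_time_ms(cpu_ms, gpu_ms)


# Hypothetical numbers: asset loading keeps the CPU busy for 50 ms every frame.
LOADING_CPU_MS = 50.0

static_screen = fps(LOADING_CPU_MS, gpu_ms=1.0)         # static image, trivial GPU cost
tunnel_shader = fps(LOADING_CPU_MS, gpu_ms=8.0)         # heavier full-screen shader
cpu_animation = fps(LOADING_CPU_MS + 10.0, gpu_ms=1.0)  # 10 ms of extra CPU-side animation

print(f"static: {static_screen:.1f} fps")         # static: 20.0 fps
print(f"shader: {tunnel_shader:.1f} fps")         # shader: 20.0 fps
print(f"CPU animation: {cpu_animation:.1f} fps")  # CPU animation: 16.7 fps
```

Under this model, the shader is effectively free until its GPU time exceeds the CPU's frame time. Any extra CPU work, by contrast, lowers the frame rate directly. Real engines and drivers add buffering and synchronization that this model ignores.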

A full-screen shader effect is not a problem if it is the only thing you are rendering. A common tip for mobile development is to avoid full-screen effects because of the large number of fragment operations they require. But a loading screen, where that view is the only thing being rendered, is the best candidate to show off a little and create a cool, crazy effect. You are unlikely to run into overdraw problems or become GPU-bound at this point.
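To put "a large number of fragment operations" into numbers, here is a back-of-the-envelope Python calculation. The 1920x1080 resolution and the 60 FPS target are example assumptions. The seven texture fetches per fragment come from counting the `tex2D` calls in the fragment function of the tunnel shader in this post.

```python
# Example assumptions: a full-HD screen at a 60 FPS target.
WIDTH, HEIGHT = 1920, 1080
TARGET_FPS = 60
# Counted from the tunnel shader's frag(): seven tex2D calls per fragment.
FETCHES_PER_FRAGMENT = 7

# A single full-screen layer shades each pixel once (no MSAA, no overdraw).
fragments_per_frame = WIDTH * HEIGHT
fetches_per_frame = fragments_per_frame * FETCHES_PER_FRAGMENT
fetches_per_second = fetches_per_frame * TARGET_FPS

print(f"{fragments_per_frame:,} fragments per frame")        # 2,073,600 fragments per frame
print(f"{fetches_per_frame:,} texture fetches per frame")    # 14,515,200 texture fetches per frame
print(f"{fetches_per_second:,} texture fetches per second")  # 870,912,000 texture fetches per second
```

Every extra full-screen layer drawn on top would shade those pixels again (overdraw) and multiply these figures, which is where the mobile advice comes from. With the tunnel as the only thing on screen, each pixel is shaded roughly once.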

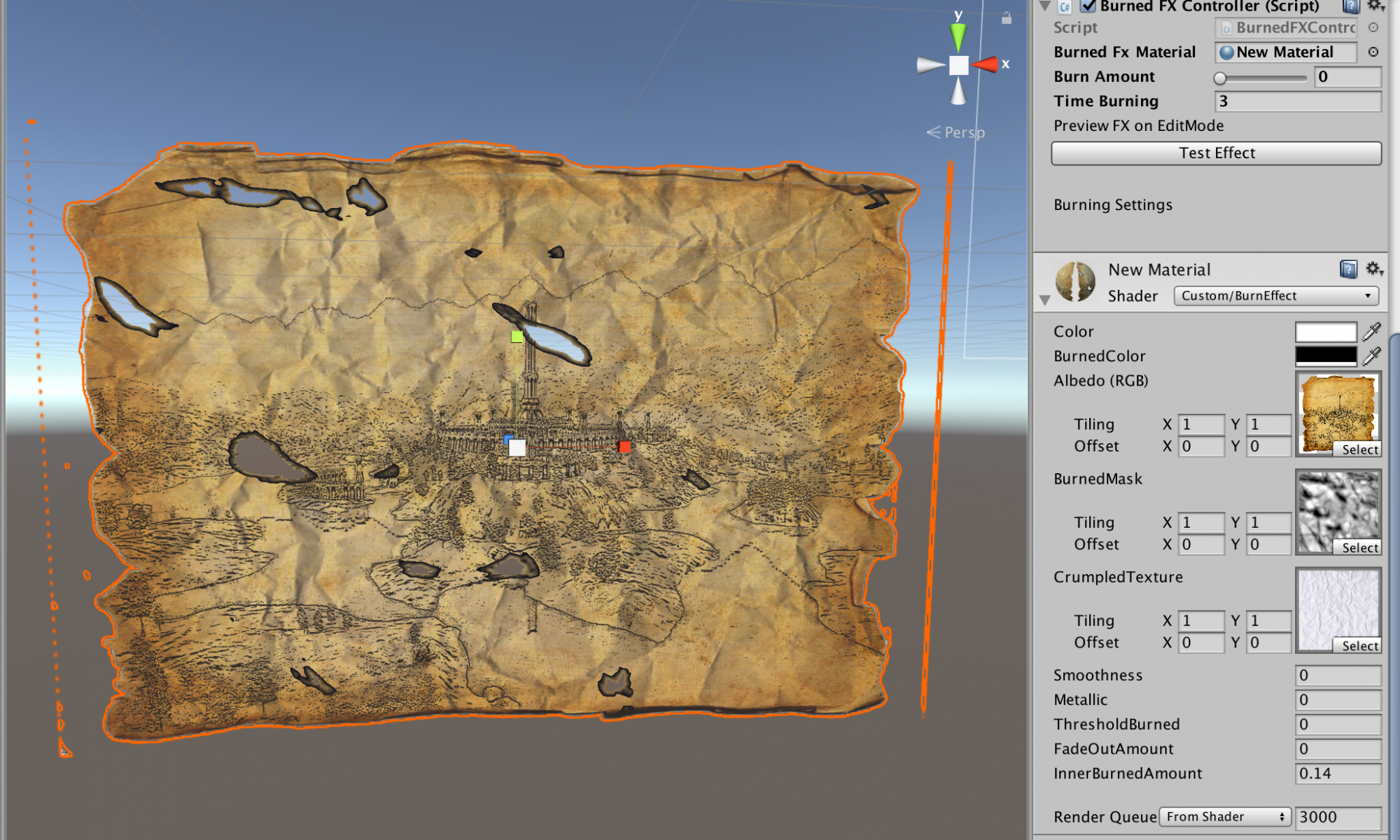

In this case, I made a tunnel effect. With just one shader and a few textures, we can create a complex, appealing, eye-catching effect that entertains our users while the game loads. The code is shown below. There are no special tricks in it; it is just a good mix of textures, sampling parameters, timing, and color blending.
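The core of that mix is how each layer's texture coordinates move over time. As an illustration only, here is a CPU-side Python model of the UV that the `secondSampling` layer computes. It is not part of the original project. It assumes the texture's wrap mode is Repeat, and it uses the documented layout of Unity's built-in `_Time` vector, (t/20, t, t*2, t*3).

```python
def unity_time(t: float) -> tuple[float, float, float, float]:
    """Mirror of Unity's built-in _Time vector: (t/20, t, t*2, t*3)."""
    return (t / 20.0, t, t * 2.0, t * 3.0)


def tunnel_uv(u: float, v: float, t: float, speed: float = 1.0,
              speed_rotation: float = 1.0, spiral: float = 1.0,
              tunnel: float = 1.0) -> tuple[float, float]:
    """Model of the UV used by the shader's secondSampling layer.

    Assumes the texture uses the Repeat wrap mode, so only the fractional
    part of each coordinate matters (like HLSL's frac()).
    """
    time_x, time_y, _, _ = unity_time(t)
    # Around the tube: slow rotation over time plus a twist that grows along it.
    su = u + time_x * speed_rotation + v * spiral
    # Along the tube: pow() compresses repeats toward v = 1; time scrolls them.
    sv = v ** tunnel + time_y * speed
    # x % 1.0 equals x - floor(x), which is what frac() returns.
    return (su % 1.0, sv % 1.0)


print(tunnel_uv(0.25, 0.5, t=0.0))              # (0.75, 0.5)
print(tunnel_uv(0.25, 0.5, t=10.0))             # (0.25, 0.5)
print(tunnel_uv(0.25, 0.5, t=0.0, tunnel=2.0))  # (0.75, 0.25)
```

After 10 seconds, the v coordinate has scrolled ten whole repeats, so it looks unchanged. Meanwhile, u has shifted by half the texture width around the tube. The fast `_Time.y` drives the forward motion, and the twenty-times-slower `_Time.x` drives the rotation.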

```hlsl
Shader "Unlit/TunnelShader"
{
    Properties
    {
        _MainTex ("Texture", 2D) = "white" {}
        _SecondTexture ("Second Texture", 2D) = "white" {}
        _RingTexture ("_RingTexture", 2D) = "black" {}
        _EdgeTexture ("_EdgeTexture", 2D) = "black" {}
        _PlasmaTexture ("_PlasmaTexture", 2D) = "black" {}
        _Color ("Tint Plasma", Color) = (1,1,1,1)
        _ColorBackground ("Tint Background", Color) = (1,1,1,1)
        _scaleTopEdgeFactor("scaleTopEdgeFactor", Range(0,1)) = 1
        _SpiralFactor("_SpiralFactor", Range(0,10)) = 1
        _speed("Speed", Range(0,20)) = 1
        _speedRotation("Speed Rotation", Range(0,20)) = 1
        _brightness("Brightness", Range(0,20)) = 1
        _tunnelEffect("tunnelEffect", Range(1,10)) = 1
    }

    SubShader
    {
        Tags { "RenderType"="Opaque" }
        LOD 100
        // Cull front faces so the inside of the tunnel mesh is visible
        // from a camera placed inside it.
        Cull Front

        Pass
        {
            CGPROGRAM
            #pragma vertex vert
            #pragma fragment frag
            // make fog work
            #pragma multi_compile_fog

            #include "UnityCG.cginc"

            struct appdata
            {
                float4 vertex : POSITION;
                float2 uv : TEXCOORD0;
            };

            struct v2f
            {
                float2 uv : TEXCOORD0;
                UNITY_FOG_COORDS(1)
                float4 vertex : SV_POSITION;
            };

            sampler2D _MainTex;
            sampler2D _SecondTexture;
            sampler2D _RingTexture;
            sampler2D _EdgeTexture;
            sampler2D _PlasmaTexture;
            float4 _MainTex_ST;
            float4 _SecondTexture_ST;
            float4 _PlasmaTexture_ST;
            fixed _scaleTopEdgeFactor;
            float _speed;
            float _brightness;
            float _tunnelEffect;
            fixed4 _Color;
            fixed4 _ColorBackground;
            // float rather than fixed: fixed typically covers only about -2..2,
            // too small for these sliders (0..20 and 0..10) on low-precision GPUs.
            float _speedRotation;
            float _SpiralFactor;

            v2f vert (appdata v)
            {
                v2f o;
                // Shrink x/z in proportion to (1 + y) so the tube tapers toward
                // its top edge; _scaleTopEdgeFactor controls how much.
                float2 offsetCone = v.vertex.xz * _scaleTopEdgeFactor * (1 + v.vertex.y);
                v.vertex.xz = v.vertex.xz - offsetCone;
                o.vertex = UnityObjectToClipPos(v.vertex);
                o.uv = TRANSFORM_TEX(v.uv, _MainTex);
                UNITY_TRANSFER_FOG(o,o.vertex);
                return o;
            }

            fixed4 frag (v2f i) : SV_Target
            {
                // Each layer offsets its UVs by time (_Time.x = t/20, _Time.y = t).
                // i.uv.y * _SpiralFactor twists them into a spiral, and
                // pow(i.uv.y, _tunnelEffect) compresses them along the tunnel.
                fixed4 firstSampling = tex2D(_SecondTexture, float2(
                    i.uv.x + _Time.x * 2 * _speedRotation * _SecondTexture_ST.x + i.uv.y * _SpiralFactor,
                    _SecondTexture_ST.y * pow(i.uv.y, _tunnelEffect) * 2 + _Time.y * _speed));

                // Two background layers at different densities along the tunnel.
                fixed4 secondSampling = tex2D(_MainTex, float2(
                    i.uv.x + _Time.x * _speedRotation + i.uv.y * _SpiralFactor,
                    pow(i.uv.y, _tunnelEffect) + _Time.y * _speed)) * _ColorBackground;
                fixed4 thirdSampling = tex2D(_MainTex, float2(
                    i.uv.x + _Time.x * _speedRotation + i.uv.y * _SpiralFactor,
                    pow(i.uv.y, _tunnelEffect) * 2 + _Time.y * _speed)) * _ColorBackground;

                // Rings and edges only rotate (their v coordinate is not animated);
                // the second edge layer spins the opposite way.
                fixed4 ringTexture = tex2D(_RingTexture, float2(
                    i.uv.x + _Time.x * _speed * 2 + i.uv.y * _SpiralFactor, i.uv.y)) * 2;
                fixed4 edgeTexture = tex2D(_EdgeTexture, float2(
                    i.uv.x + _Time.x * _speed * 4 + i.uv.y * _SpiralFactor, i.uv.y)) * 0.7 * _Color;
                fixed4 edgeTextureTwo = tex2D(_EdgeTexture, float2(
                    i.uv.x - _Time.x * _speed * 8 + i.uv.y * _SpiralFactor, i.uv.y)) * 0.7;

                // Plasma layer, masked by firstSampling^4 and tinted with _Color.
                fixed4 plasmaTexture = tex2D(_PlasmaTexture, float2(
                    i.uv.x * _PlasmaTexture_ST.x - _Time.x * _speed * _speedRotation + i.uv.y * _SpiralFactor,
                    pow(i.uv.y, _tunnelEffect) * _PlasmaTexture_ST.y + _Time.x * 4))
                    * pow(firstSampling, 4) * 3 * _Color;

                // Additive blend; _brightness scales only the multiplied ring/background term.
                fixed4 col = plasmaTexture + edgeTextureTwo + edgeTexture
                           + ringTexture * firstSampling * secondSampling * thirdSampling * _brightness;

                // apply fog
                UNITY_APPLY_FOG(i.fogCoord, col);
                return col;
            }
            ENDCG
        }
    }
}
```
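The vertex function's cone deformation can be checked on the CPU as well. The Python model below mirrors the two lines that compute and apply `offsetCone`. It assumes, hypothetically, that the tunnel mesh resembles Unity's built-in cylinder: object-space y runs from -1 (bottom) to 1 (top), and the ring radius is 0.5.

```python
def cone_offset(x: float, y: float, z: float,
                scale_top_edge: float) -> tuple[float, float, float]:
    """CPU mirror of the tunnel shader's vertex deformation (object space)."""
    # offsetCone = v.vertex.xz * _scaleTopEdgeFactor * (1 + v.vertex.y)
    k = scale_top_edge * (1.0 + y)
    # v.vertex.xz = v.vertex.xz - offsetCone; y is left untouched.
    return (x - x * k, y, z - z * k)


# Hypothetical mesh: a ring of radius 0.5 sampled at the bottom, middle and top.
for y in (-1.0, 0.0, 1.0):
    x, _, _ = cone_offset(0.5, y, 0.0, scale_top_edge=0.25)
    print(f"y = {y:+.0f}: radius {x:.3f}")
# y = -1: radius 0.500
# y = +0: radius 0.375
# y = +1: radius 0.250
```

Each ring is scaled by 1 - _scaleTopEdgeFactor * (1 + y). The bottom ring (y = -1) keeps its size, and a factor of 0.5 collapses the top ring to a point. Above 0.5 the scale turns negative near the top. With the default value of 1, the tube therefore pinches to a point at y = 0 and flares back out, mirrored, like an hourglass.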